Meta AI Research has released Segment Anything, a model that can do automatic semantic segmentation of images without prior training for a specific application. The code, pre-trained models, and the training dataset are publicly available. After testing a few images on the website, I installed it as per the instructions on the Github page.

I wanted to try it on ProRail’s high-resolution nadir and oblique aerial imagery, which is available to download from SpoorInBeeld.nl. I wrote a Python program that loads the images and then moves a sliding window over them to obtain 1024×1024 pixel images, which is a much more convenient size for further processing than the original images (which can be up to 150 Megapixels in size). The smaller images are run through the segmentation and then, for now, stored with the mask overlay. For further processing it would make more sense to cut out each mask.

Here’s an image showing cars on a highway:

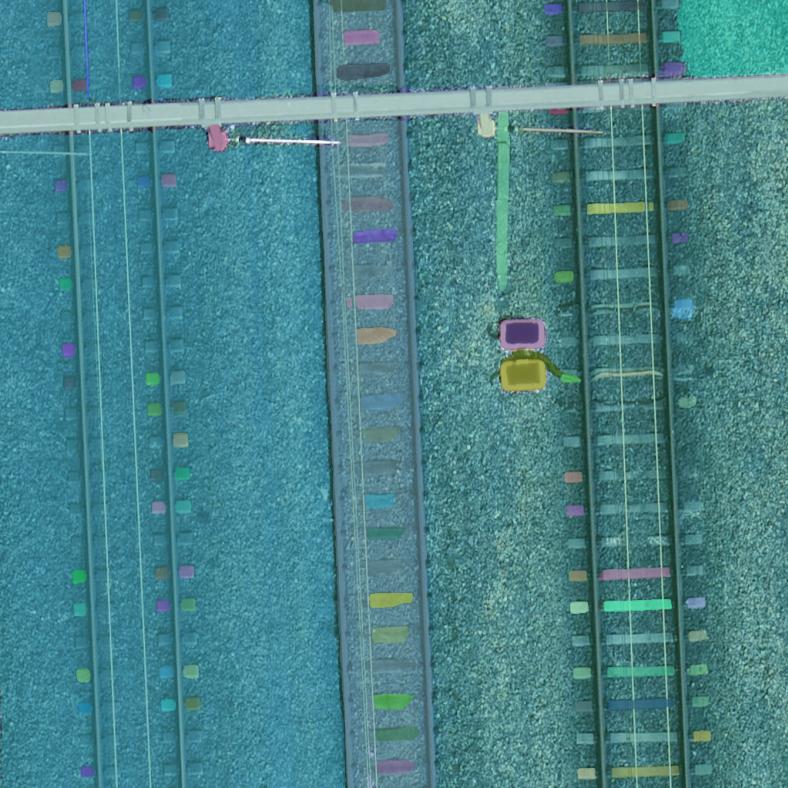

And here’s a piece of railroad track:

And here’s a piece of railroad track:

I think that the segmentation does a pretty good job. The interrupted paint stripes are all separate segments, as are the individual sleepers – but the ballast bed in between rails is one continuous surface, and the two rail coils in the lower image have been very nicely detected. I was about to complain about oversegmentation, but on second consideration this model does a very good job of splitting actual objects from featureless areas.

I think that the segmentation does a pretty good job. The interrupted paint stripes are all separate segments, as are the individual sleepers – but the ballast bed in between rails is one continuous surface, and the two rail coils in the lower image have been very nicely detected. I was about to complain about oversegmentation, but on second consideration this model does a very good job of splitting actual objects from featureless areas.

Here’s an oblique image of cars on the highway:

In how many pieces do you want your vehicle? 🙂 The segmentation is very detailed, with individual doors and windows being picked out.If you would only want to detect cars, this would definitely be oversegmented – but then this could probably be solved by downsampling the image to lower resolution.

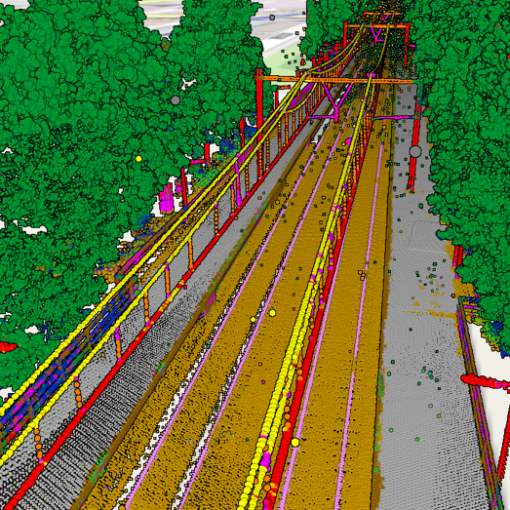

And here’s an oblique image of the railroad track:

The most important thing here to me is that the catenary pole appears to be considered a single object (as is the lamp pole excluding the light fixture).

The most important thing here to me is that the catenary pole appears to be considered a single object (as is the lamp pole excluding the light fixture).

The question is then of course what practical applications this approach has, as it does not tell you what is has found. The big advantage is that it does indeed segment anything, without the need to first assemble a domain-specific training dataset and train a custom model. You can filter the masks by size to only retain segments that are of interest, and then run the segments through a classifier model – without the need to first to object detection with a custom model. Some of the oversegmentation could probably also be dealt with by lowering the image resolution.

All things considered this is a very interesting development that clearly has its uses for object detection and classification in images.